It's been five months since my last blog post! In actuality, I simply burned out there for a while. I did manage to finally upgrade my CPU from a dual-core to a quad-core, allowing much better testing and enhancement of Bitphoria's multi-threading system. Many things have been added to Bitphoria since my last blog post.

I finally decided to add a world-wide fluid dynamics simulation system, with LOD to minimize computation. This effectively serves as a sort of 'windmap' for the world - it allows objects to drag the 'air' around, which in turn pulls other objects, dynamic meshes, and particles along with it. Entities can also cause a momentary change in pressure at their location, for blast or black-hole vortex style effects that actually affect the smoke and entities surrounding them. Rockets can now leave swirling trails of smoke, and cause particles to wisp around as they zoom through a cloud of them. It's pretty neato, but purely a superficial effect. It's something I've wanted to implement in Bitphoria since I first sat down and sketched out some ideas I wanted to see, before I even started writing the engine.
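
To give a rough feel for the idea, here's a minimal sketch of a 'windmap' in Python. It is not Bitphoria's actual code: the `WindMap` class, its method names, the 2D grid, and the damping/falloff constants are all assumptions made up for illustration, and the LOD scheme is left out entirely. It only shows the two interactions described above: an object dragging the air in its cell, and a momentary pressure pulse pushing (or pulling) the air around a point.

```python
import math


class WindMap:
    """Coarse 2D grid of air velocities (hypothetical stand-in for a 3D windmap)."""

    def __init__(self, width, height, damping=0.9):
        self.width, self.height = width, height
        self.damping = damping
        self.vel = [[(0.0, 0.0) for _ in range(width)] for _ in range(height)]

    def _cell(self, x, y):
        # Clamp a world position to the nearest valid grid cell.
        cx = min(max(int(x), 0), self.width - 1)
        cy = min(max(int(y), 0), self.height - 1)
        return cx, cy

    def drag(self, x, y, vx, vy, strength=0.5):
        """A moving object pulls the air in its cell toward its own velocity."""
        cx, cy = self._cell(x, y)
        ax, ay = self.vel[cy][cx]
        self.vel[cy][cx] = (ax + (vx - ax) * strength, ay + (vy - ay) * strength)

    def pulse(self, x, y, pressure, radius=2):
        """Momentary pressure change: positive blasts air outward, negative sucks it inward."""
        cx, cy = self._cell(x, y)
        for j in range(max(cy - radius, 0), min(cy + radius + 1, self.height)):
            for i in range(max(cx - radius, 0), min(cx + radius + 1, self.width)):
                dx, dy = i - cx, j - cy
                dist = math.hypot(dx, dy)
                if dist == 0 or dist > radius:
                    continue
                falloff = pressure / (dist * dist)  # assumed inverse-square falloff
                ax, ay = self.vel[j][i]
                self.vel[j][i] = (ax + dx / dist * falloff, ay + dy / dist * falloff)

    def sample(self, x, y):
        """Air velocity at a position; particles and entities would add this to their motion."""
        cx, cy = self._cell(x, y)
        return self.vel[cy][cx]

    def step(self):
        """Crude diffusion: average each cell with its 4 neighbours, then damp."""
        new = [[(0.0, 0.0)] * self.width for _ in range(self.height)]
        for j in range(self.height):
            for i in range(self.width):
                sx = sy = 0.0
                for di, dj in ((0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)):
                    ni = min(max(i + di, 0), self.width - 1)
                    nj = min(max(j + dj, 0), self.height - 1)
                    sx += self.vel[nj][ni][0]
                    sy += self.vel[nj][ni][1]
                new[j][i] = (sx / 5 * self.damping, sy / 5 * self.damping)
        self.vel = new
```

A rocket would call `drag()` each frame with its own velocity, an explosion would call `pulse()` once with a positive pressure, and smoke particles would call `sample()` to find out which way the air is carrying them. A real fluid solver would also handle advection and pressure projection, which this sketch skips.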

I've also added signed distance function primitives to the procedural modeling stuff for easily constructing polygonal, wireframe, and point cloud geometries for entities - CSG style. This has made it much easier to model more interesting entity appearances, and now scripters aren't forced to plot individual vertices to create triangles.
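
For anyone unfamiliar with the technique, here's a minimal Python sketch of CSG-style modeling with signed distance functions. Again, this is not Bitphoria's scripting API: every name here (`sphere`, `box`, `union`, `subtract`, `smooth_union`, `point_cloud`) is hypothetical, and the blend formula is just one common polynomial smooth-minimum. An SDF returns a negative value inside a shape and a positive one outside, so combining shapes comes down to `min` and `max`.

```python
import math


def sphere(cx, cy, cz, r):
    """SDF of a sphere: distance to the centre minus the radius."""
    return lambda x, y, z: math.sqrt((x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2) - r


def box(cx, cy, cz, hx, hy, hz):
    """SDF of an axis-aligned box with half-extents (hx, hy, hz)."""
    def sdf(x, y, z):
        qx, qy, qz = abs(x - cx) - hx, abs(y - cy) - hy, abs(z - cz) - hz
        outside = math.sqrt(max(qx, 0.0) ** 2 + max(qy, 0.0) ** 2 + max(qz, 0.0) ** 2)
        inside = min(max(qx, qy, qz), 0.0)
        return outside + inside
    return sdf


def union(a, b):
    return lambda x, y, z: min(a(x, y, z), b(x, y, z))


def intersect(a, b):
    return lambda x, y, z: max(a(x, y, z), b(x, y, z))


def subtract(a, b):
    """Carve shape b out of shape a."""
    return lambda x, y, z: max(a(x, y, z), -b(x, y, z))


def smooth_union(a, b, k):
    """Blend-merge two shapes; k (> 0) controls how wide the blend region is."""
    def sdf(x, y, z):
        da, db = a(x, y, z), b(x, y, z)
        h = max(k - abs(da - db), 0.0) / k
        return min(da, db) - h * h * k * 0.25
    return sdf


def point_cloud(sdf, lo, hi, n):
    """Sample an n*n*n grid over [lo, hi]^3 and keep the points inside the shape."""
    step = (hi - lo) / (n - 1)
    coords = [lo + i * step for i in range(n)]
    return [(x, y, z) for x in coords for y in coords for z in coords
            if sdf(x, y, z) <= 0.0]


# A cube with a spherical hollow carved out of its centre.
carved = subtract(box(0, 0, 0, 1, 1, 1), sphere(0, 0, 0, 0.5))
points = point_cloud(carved, -1.0, 1.0, 5)
```

The same sampled grid could feed a voxel triangulation step (marching cubes or similar) to get a triangle mesh instead of points, which is roughly the progression the screenshots below show.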

Instead of explaining it much further, I'll just show you a bunch of development screenshots from when I was working on the SDF modeling stuff:

| When things were first starting to work: plotting points for individual SDF voxels - sized according to the 'density' of the voxel they represent. |

| Utilizing the same voxel triangulation code to yield triangle mesh geometry from a SDF model. |

| In my attempt to add the ability to smooth an isomesh per the distance field, a lot of things were going wrong (these are supposed to just be smooth spheres). |

| A sphere that hasn't been smoothed, showing the base isomesh. |

| A ring of spheres blend-merged and the result smoothed over. |

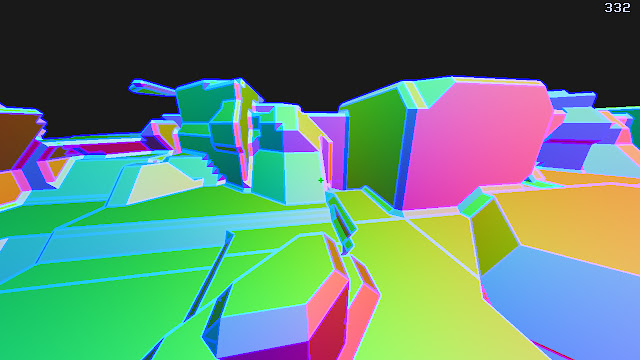

| A screenshot of the last of the testing/development game before I started scripting a new base game from scratch. |

A bunch of other things have been added to Bitphoria as well, but I haven't touched it in at least 2 months and it's currently not something I'm particularly motivated to pursue. After making all the progress that I did on Bitphoria a few months ago I began scripting a base/default game that I would then use as a template for creating various game modes to release with the next version.

The base game is a sort of deathmatch game with simple AI drones, obstacles, and hazards for players to negotiate while battling it out with one another, which always seemed more interesting to me than just raw PvP deathmatch gameplay. Anyway, I just didn't find working on it rewarding anymore. I can come up with hundreds of little ideas and mechanics, and I know how to implement them by exploiting the capabilities of Bitphoria's scripting system, but it just doesn't excite me like it did when I was younger. Back then I was working with the Quake engine. I'm sure scripting stuff in Bitphoria would be a blast for many other young spry minds out there, but I'm not of a mind to seek those kids out, even though they were who I was thinking of when I designed the whole thing.

My goal has always been to make enough interesting stuff to show off Bitphoria's capabilities as a platform for creating, sharing, and playing custom games with other people - and hopefully have it be inspiring enough to motivate people to engage their own creative minds within the paradigm Bitphoria's scripting system provides. Well, as it stands, this probably won't be happening any time soon. I've had to make my peace with this fact over the last few months. I've been struggling to allow myself to work on anything else, or pursue any of my other passions, without berating myself for letting Bitphoria development go idle. My resolve has been to look at this situation knowing that I owe it to myself to take care of my own mental well-being and *let myself* pursue other projects and passions, because nothing good comes from sitting around not working on anything else purely out of guilt.

Yes, I wish that I could knock out Bitphoria in "record-manic-stay-up-all-night-not-caring-about-anything-else-in-life" time, which was naively my plan from the beginning, but it's just not in the stars. Am I lazy? Eh... If that were the case, I don't think I'd be feeling like there's not enough time in the day to work on what I *do* want to work on, especially after having overcome the self-inflicted shame I'd been enduring. I was tempted to release Bitphoria completely FOSS, just dump the code on the interwebs, and abandon any and all aspirations of trying to monetize it. I'd just be giving all of my work away for free. Alas, me lady talked me out of it, and explained that I should just let it sit until I was ready to come back to it. So that's the plan.

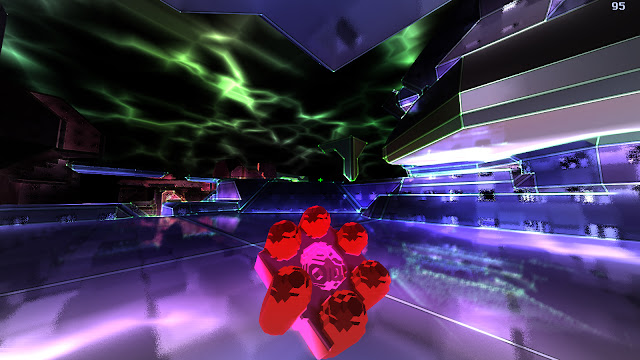

| Bitphoria in its current form. |

I've always been excellent at arcane technical pursuits and hacking away at them into the night, even now at thirty years old. But as far as actually designing a fun game or dealing with PR and promotion is concerned, I am seriously lacking in drive and/or spirit. With recent developments I've become more inclined to focus on keeping my creative spirits high and working on what I love to work on: tackling difficult algorithmic problems. I've pretty much resigned myself to being the Wozniak to someone else's Jobs. I haven't met my 'Jobs' yet, and I hope I do someday, because I think that I have a lot to offer to and share with the world, but lack the ability to really get it out there.

| The good old days. |

In my relative slump I did manage to muster the gumption to start playing around on my CNC once again - the product of yet another 'abandoned' project that I had begun to feel guilty about leaving untouched and unloved for so long. It's really nice to have something to work on with my hands though :D

I've since explored a few ideas and have somehow finally convinced my wife that making stuff on the CNC is a financially worthwhile pursuit, one that doesn't require dealing with nearly as many customers as the craft products we currently sell through our online business. We could be selling fewer big-ticket, high-end CNC-milled items rather than many cheaper, smaller decorative items. In other words, we could be making more money for less work, and dealing with fewer customers, if we both transitioned our business toward producing large quality works as a team.

It would definitely be nice if we could spend more time together again like in the old days, and I see CNC projects as being the nearest of several keys to unlocking that future for us, but it must be as a team. I don't believe she's the Jobs to my Woz, but I do believe we have the potential to achieve great things together. It has worked thus far with our online business, and I feel that she's fully capable of meeting me halfway while we engage a new medium together.

I've also been sketching out and outlining some ideas for an old project my late father had proposed and actively tried to encourage me to pursue. I'll save the details on that for a later blog post.