I currently have three ongoing projects:

Creating toolpaths in PixelCNC using its own logo as image input.

PixelCNC:

My most recent endeavor, PixelCNC, was started at the end of summer 2017. It has since been released in an alpha early-access state, with a few big items left on the todo list before it's where I really want it to be.

PixelCNC relies on image-based operations to generate CNC toolpaths from image input, so moving the image processing code to the GPU could speed up toolpath generation dramatically. With nearly 20 years of experience with OpenGL, this isn't much of a hurdle, at least as far as planning it out and solving the problem itself. The most difficult part is committing the time and the mental effort.
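Part of why image-based toolpath generation maps so well to the GPU is that many of its core operations are independent per pixel. As an illustration, here's a minimal CPU sketch of one common heightmap CAM technique (not necessarily what PixelCNC does internally): finding, for each pixel, how low a ball-end tool's tip can go without gouging the surface, which amounts to a grayscale dilation of the heightmap by the tool's shape. The function names, square pixels, and a tool radius given in whole pixels are all assumptions for the sake of the example.

```python
import math
from typing import Dict, List, Tuple

Kernel = Dict[Tuple[int, int], float]

def ball_tool_kernel(radius_px: int, px_size: float) -> Kernel:
    """Rise of a ball-end tool's surface above its tip for each pixel offset
    inside the tool's footprint (hypothetical helper)."""
    r = radius_px * px_size  # tool radius in world units
    kernel: Kernel = {}
    for dy in range(-radius_px, radius_px + 1):
        for dx in range(-radius_px, radius_px + 1):
            d = math.hypot(dx, dy) * px_size
            if d <= r:
                # a sphere of radius r rises r - sqrt(r^2 - d^2) above its lowest point
                kernel[(dx, dy)] = r - math.sqrt(r * r - d * d)
    return kernel

def tool_tip_heights(height: List[List[float]], kernel: Kernel) -> List[List[float]]:
    """For each pixel, the lowest tool-tip height that doesn't gouge the heightmap.

    This is grayscale dilation with a non-flat structuring element; kernel
    offsets that fall outside the image are simply ignored.
    """
    rows, cols = len(height), len(height[0])
    out = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            best = -math.inf
            for (dx, dy), rise in kernel.items():
                sx, sy = x + dx, y + dy
                if 0 <= sx < cols and 0 <= sy < rows:
                    # the tool surface over (sx, sy) must stay at or above the material
                    best = max(best, height[sy][sx] - rise)
            out[y][x] = best  # the (0, 0) offset guarantees best is finite
    return out
```

Every output pixel reads only from the input heightmap, so the two outer loops could become one fragment or compute shader invocation per pixel. On the CPU, the inner kernel loop is where nearly all the time goes.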

A larger and less clearly defined goal would shift PixelCNC toward the realm of image editing and manipulation: adding the ability to create and edit images, as a whole new program mode, would remove the step of using a separate, dedicated program just to create an image to feed into PixelCNC. Reducing or eliminating the need for an image manipulation program would further streamline the workflow for artistic CNC endeavors, which is, after all, the entire point of PixelCNC. I have a few ideas about what an image editing mode would comprise, including some things never before seen in an image editing program that lend themselves really well to sculpting depthmaps easily and intuitively, and that would build on the image processing functionality I've already written.

One more decently sized feature I'd like to add is an auto-update system. It would be introduced once PixelCNC enters beta, and would save users from having to manually download and install each new version of PixelCNC as it's released.

There are a bunch of other little things on the todo list for PixelCNC that aren't on the main path and just require time and effort. They're less consequential, but still useful or handy. A few, to give you an idea:

- Functionality to detect when a series of toolpath segments fits a circular arc within a given tolerance and replace them with G02/G03 circular arc motions.

- User-defined presets for CNC operations, so users can quickly create an operation they use frequently without having to edit each parameter and rebuild operations from scratch.

- Defining rectangular/cylindrical stock shapes for confining generated toolpaths to. This is trickier than it sounds, simply because of how PixelCNC works.

- Inlay generation mode, which would build on the existing medial-axis carving operation to allow the creation of a negative carving - whatever operations that would entail - to perfectly fit over an existing medial-axis carve operation.

- Automatic G-code export by tool, which would build on the existing ability to toggle which operations are included in exported G-code, so that users could easily create CNC programs which will perform all operations concerning each tool individually.

- Polygon Operation: similar to the spiral operation, except the spiral would be an N-sided polygon so that toolpaths could be concentric triangles, squares, hexagons, and so on.

- Mesh export: allowing users to export the heightfield meshes that PixelCNC generates for visualization and certain CAM algorithms.
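For the arc-fitting item, one possible approach (a sketch, not PixelCNC's code) is to take a run of consecutive XY toolpath vertices, compute the circle through its first, middle, and last points, and accept the run as an arc only if every vertex lies within a tolerance of that circle. In standard G-code, G02 and G03 are clockwise and counterclockwise arcs. This sketch assumes the common convention where I and J give the arc center relative to the start point in the G17 (XY) plane; the function names and output formatting are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]

def circumcenter(a: Point, b: Point, c: Point) -> Optional[Point]:
    """Center of the circle through three points, or None if they're collinear."""
    (ax, ay), (bx, by), (cx, cy) = a, b, c
    d = 2.0 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if abs(d) < 1e-12:
        return None  # a straight run, not an arc
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    return ux, uy

def polyline_to_arc(points: List[Point], tol: float) -> Optional[str]:
    """Replace an XY polyline with one G02/G03 move if every vertex lies within
    tol of the circle through its first, middle, and last vertices."""
    if len(points) < 3:
        return None
    start, mid, end = points[0], points[len(points) // 2], points[-1]
    center = circumcenter(start, mid, end)
    if center is None:
        return None
    cx, cy = center
    r = math.hypot(start[0] - cx, start[1] - cy)
    if any(abs(math.hypot(x - cx, y - cy) - r) > tol for x, y in points):
        return None
    # z of the cross product (mid - start) x (end - mid): positive means a left turn
    cross = (mid[0] - start[0]) * (end[1] - mid[1]) - (mid[1] - start[1]) * (end[0] - mid[0])
    code = "G03" if cross > 0 else "G02"  # G03 counterclockwise, G02 clockwise (G17 plane)
    i, j = cx - start[0], cy - start[1]   # center offset relative to the start point
    return f"{code} X{end[0]:.4f} Y{end[1]:.4f} I{i:.4f} J{j:.4f}"
```

A fuller version would grow runs greedily, check the sagitta of each segment between vertices rather than only the vertices themselves, and reject runs that double back or sweep past a full circle.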

Another thing I'm planning and setting up for is a demonstration video for PixelCNC that shows the entire process: going from an image to creating a project in PixelCNC, defining tools, setting up operations, using the simulation mode, exporting the G-code, loading it into a CNC controller, and actually cutting stuff. This could also be cut down into a short, concise promotional video that people could pass around to spread the word about PixelCNC and get the idea across quickly. I'm still deciding what exactly I want to demonstrate, because running the CNC is always a commitment of energy, time, and raw material, so I want to be sure of what I'm doing before I go ahead with the requisite expenditures.

Holocraft:

Holocraft, a less recent but related project, is a much more esoteric CNC pursuit: a program that is currently in a less user-friendly state of partial disrepair. I could fix it up a bit and begin selling it as well, which at one time was the tentative plan. The real plan was to sell actual holograms, but lacking access to a CNC capable of realizing that vision put that on hold for the time being. An old friend I recently got back in touch with told me he's been working to set up a hackerspace with machines that could make my original dream a reality.

Another idea is to incorporate Holocraft into PixelCNC instead, as an operation the user would generate a toolpath for on a loaded image. The trick there is that Holocraft specifically relies on 3D geometry as input, for which it generates toolpaths that form reflective optics recreating some representation of that geometry when viewed under a point light source.

Decisions, decisions...

Bitphoria:

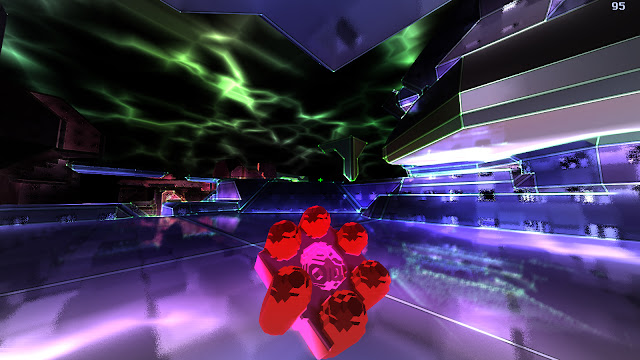

My biggest project, at least in terms of the time and energy I've invested over recent years, is my game/engine Bitphoria. It's the culmination of a lifetime of learning all things gamedev, and of virtually every novel game idea I've ever had. I take pride in the fact that it's written from scratch and does things differently, but it's not quite "there" as far as visual polish and aesthetics are concerned. The actual 'game' aspect is largely incomplete, but the engine is basically ready to be made into a wide array of games. Due to recent developments, I've begun tinkering with Bitphoria again in between incremental PixelCNC updates, with a newfound vision for what it's meant to be.

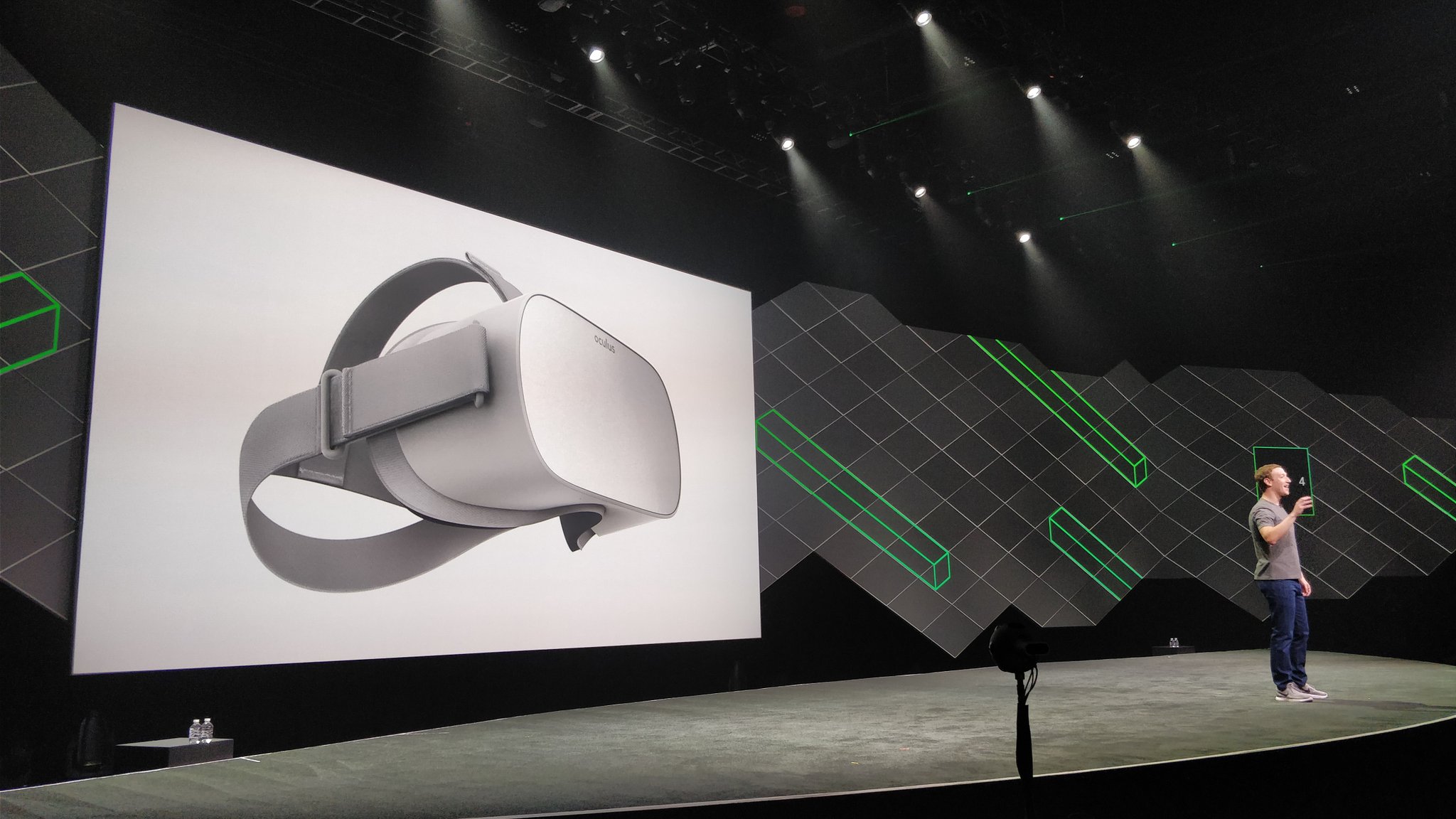

The Oculus Go being announced at OC4 by Zuckerberg hisself.

By 'recent developments' I mean that I finally caught the VR bug back in October, when the Oculus Go was announced during the OC4 event down in San Jose (it was San Jose, right?). Something just clicked, and I decided I would get a Go as soon as humanly possible and make 2018 the year I dive into VR development after wrapping up PixelCNC. I'm convinced that the lower price point, and no longer needing to own a high-end smartphone, PC, or game console, will prove fruitful for the VR industry as a whole. More people will suddenly find low-end VR affordable, which will expose many more people to at least some form of quality VR, and not some shoddy makeshift excuse for VR like Google Cardboard (at least when used with phones that have poor sensor quality or clogged-up Android systems that saddle apps with unbearable motion-to-photon latency). Only then will they know why VR is here to stay!

"First Contact", the Oculus Rift demo I tried at Best Buy.

After a few months of watching PC VR headsets drop in price, I decided I didn't want to limit myself to 3DOF (three degrees of freedom) and the little directional remote controller, and started looking at other headsets. Eventually I found myself at Best Buy demoing "First Contact" on the Rift. What a far cry from the DK2 I had tried at a friend's house a few years prior! The Touch controllers made a world of difference: being able to actually interact with a virtual scene a universe away using my own hands was unlike anything I had ever imagined.

You forget you're holding controllers and feel like you're grabbing things.

While my wife was ordering a new PSU on Newegg, I told her to go ahead and order a Rift as well. I've been a proud owner of the Rift for a month now and have already been integrating the Oculus PC SDK into Bitphoria. It has taken a lot of work: because the vast majority of a game in Bitphoria is described in external text files, I needed to introduce some means of connecting entities to the controllers and responding to the different input states of the buttons and thumbsticks.
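Responding to controller input from script files mostly comes down to turning raw per-frame button and thumbstick readings into events that an entity function can be triggered by. A minimal sketch, assuming the SDK provides each button's current down/up state and each thumbstick's (x, y) deflection every frame; the class, the event names, and the 0.2 deadzone are hypothetical, not Bitphoria's or the Oculus SDK's actual API:

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass
class ButtonTracker:
    """Turns a raw per-frame button state into edge events (hypothetical)."""
    down: bool = False

    def update(self, is_down: bool) -> Optional[str]:
        """Return 'pressed', 'released', 'held', or None for this frame."""
        prev, self.down = self.down, is_down
        if is_down and not prev:
            return "pressed"
        if prev and not is_down:
            return "released"
        return "held" if is_down else None

def apply_deadzone(x: float, y: float, deadzone: float = 0.2) -> Tuple[float, float]:
    """Radial thumbstick deadzone: zero small deflections, rescale the rest to 0..1."""
    mag = math.hypot(x, y)
    if mag <= deadzone:
        return 0.0, 0.0
    scale = min((mag - deadzone) / (1.0 - deadzone), 1.0) / mag
    return x * scale, y * scale
```

Binding entity functions to "pressed" or "released" rather than to the raw state keeps a one-shot action from firing on every frame a button is held.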

This is what scripting simple VR player flying controls in Bitphoria looks like.

There's still a bit of work to go before it's fully integrated, at which point I can release Bitphoria as a means for people to quickly and easily script all manner of multiplayer games without any real gamedev or modding experience. Ultimately I'd like to expand on the existing game bitfile system and bypass the scripting system altogether with a WYSIWYG game editor that lets users craft games directly in VR. Bitphoria would then be the first VR-based game making system! No more screwing around with Unity or Unreal: just fire up Bitphoria and start building games.

Even if you already know everything, you have to learn how *they* do it!

However, Bitphoria's codebase has already begun showing its age. The things I wish I'd done differently are piling up and make it difficult to work in. So the plan for now is to focus on making a single cool game out of Bitphoria, while promoting the scripting side of things to get people interested and involved in making their own VR games with it. Ultimately, though, I plan to rebuild the engine from scratch, borrowing a lot of code and structure from the existing engine but reimplementing everything more cleanly and with in-engine game editing in mind.

There are several components that would require specially designed and implemented WYSIWYG interfaces for crafting Bitphoria games:

- Entity Types - Describes each possible entity type, serving as a sort of template. Dictates the various aspects of a game entity, such as its physics behaviors, which effects flags it has set, its collision volume and size, which entity functions various logic states can trigger, any ambient/looping audio, particle emissions, appearance, etc.

- Entity Functions - Executable lists of instructions that produce specific entity behaviors in response to internal and external logic states or triggers the engine detects, such as physics events, player interaction, scripted timers, and conditional statements becoming satisfied.

- Model Procedures - Lists of modeling operations which produce geometry for entity appearances by plotting out points, lines, triangles, and signed-distance function primitives for modeling voxel-based geometries with constructive solid geometry conventions. These can be made to vary in a number of ways with each generated instance of a procedure, so that no two entities of the same type have to look exactly alike.

- Dynamic Meshes - 3D point clouds of 'nodes' attached together using springs. Springs can be assigned procedural models to give the 'dynamesh' visual form and the appearance of multiple conjoined moving parts. Dynameshes allow entities to appear to have more dynamic physics interactions with the environment and other entities as well as allow for simple/crude animations. Otherwise entities would be restricted to appearing only as rigid static geometry.

- Entity HUD Overlays - Procedural models assigned to entity types to display various stats and visual indicators conveying the state of the entity. Entity state and properties can drive modifiers to animate color, orientation, size, and position of the models drawn to allow for a variety of interesting HUD elements (aka 'widgets') to be drawn over an entity in a player's perspective.

- World Prefabs - A more recent idea that I'm still toying with: world prefabs would consist of simple voxel models which the world-generation algorithm randomly places around the map per various modifier flags and statistical 'tendencies' specified for each prefab, providing some semblance of structure and design to worlds beyond what little is offered by the existing random plateaus/caves/pits. These could also have entity spawns placed in them so they can serve an actual function during gameplay.
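Dynamic meshes as described in the list above (nodes joined by springs) are essentially a mass-spring system. Here's a minimal sketch of one simulation step, assuming unit node masses, Hooke's-law springs, a per-step velocity damping factor, and semi-implicit Euler integration; the class and field names are hypothetical, and the procedural models attached to springs aren't shown.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = List[float]

@dataclass
class Dynamesh:
    """Nodes joined by springs (hypothetical layout, unit mass per node)."""
    pos: List[Vec3]
    vel: List[Vec3]
    springs: List[Tuple[int, int, float, float]]  # (node a, node b, rest length, stiffness)
    damping: float = 0.98  # fraction of velocity kept each step

    def step(self, dt: float, gravity: Tuple[float, float, float] = (0.0, 0.0, 0.0)) -> None:
        """Advance the mesh one step using semi-implicit Euler integration."""
        force = [list(gravity) for _ in self.pos]
        for a, b, rest, k in self.springs:
            d = [self.pos[b][i] - self.pos[a][i] for i in range(3)]
            length = math.sqrt(sum(c * c for c in d))
            if length == 0.0:
                continue  # coincident nodes: no defined spring direction
            # Hooke's law along the spring axis; positive f pulls the nodes together
            f = k * (length - rest) / length
            for i in range(3):
                force[a][i] += f * d[i]
                force[b][i] -= f * d[i]
        for n, (p, v) in enumerate(zip(self.pos, self.vel)):
            for i in range(3):
                v[i] = (v[i] + force[n][i] * dt) * self.damping  # update velocity first...
                p[i] += v[i] * dt                                # ...then position with the new velocity
```

Semi-implicit Euler (velocity before position) is a common choice for game-physics spring networks because it's cheap and stays stable at moderate stiffness; very stiff springs would need smaller time steps or a different integrator.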

Designing and implementing intuitive interfaces for users to define Bitphoria games with, with immediate feedback for quick iteration and turnaround, is a task unto itself. VR is a young medium whose language we're still becoming familiar with and exploring, so there's the added challenge of discovering what works and what doesn't. I imagine VR allows for much more intuitive interfaces than 2D does, especially when it comes to crafting 3D content. All developers are still figuring it out, collectively. It's a bit of a wild west.

Regardless, I believe it will be a powerful thing to give the average person the right tools to quickly and easily create their own quality interactive gaming experiences for VR. Bitphoria's scripting system is designed to reduce each dimension of a game to just a few simple options, but the number of dimensions, and the freedom to connect all sorts of pieces together, is what allows for such a vast universe of permutations.

Ultimately, the goal has always been to create a system somewhat akin to SnapMap for DOOM, which coincidentally follows in the same vein as my original vision for Bitphoria: a platform for people to easily create and share their own mods/games. SnapMap's WYSIWYG editor trumps my weary plan for users to work in (relatively) tedious script files. Admittedly I was somewhat awe-struck, and simultaneously irked, when id Software unveiled DOOM at E3 2015, after I had already been working on Bitphoria for a year. "They stole my idea!" I'm just glad SnapMap was the last project on DOOM that Carmack worked on before his departure, as it was born of the community modding spirit which he was always such a proponent of.

Editing some logic gates in DOOM's SnapMap.

Anyway, that's pretty much that. There are also a bunch of things I need to revisit, such as the post-processing effects system and the windmapping, as these really soaked up whatever CPU and GPU headroom was previously left on the table. When running in VR, however, they seem to push the envelope a bit too far to be viable, at least on my bare-minimum VR spec system. I'll have to buckle down and really push to keep certain things in there. Screen-space reflections? Likely out of the question now, but maybe I can hack together something that provides a similar effect. It was always more of an aesthetic thing than a way to give Bitphoria more visual realism. Particles and wind fluid dynamics could perhaps be moved to the GPU, but we'll see. They might just be effects reserved for the highest system specs.

I should be able to re-enable at least some minimal post-processing effects. My FXAA implementation was pretty solid, and would surely be faster than supersampling, and possibly faster than multisampling. I'll just have to see for myself. The postfx system was also responsible for final color adjustment, and featured a really cool spectral tracer effect in which particles and entities could leave overbright residual trails. It was subtle, but it really accentuated the whole aesthetic and feel, making certain objects, such as lasers and explosions, seem to glow blindingly bright. The windmapping added a lot to the overall feel as well. Maybe I can figure out some kind of layered screen-space/frustum-space fluid dynamics solver that would be projected onto the scene when particles and entities query the windmap. It was always purely a visual effect, never intended to affect gameplay-relevant entities, and it really gave a whole new dimension to the feel of Bitphoria. I miss my wind.
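A trail effect like the spectral tracer can be approximated with a feedback buffer: each frame, the previous trail fades by a constant factor and is refreshed wherever the current frame is overbright, then composited over the scene. A minimal per-pixel sketch on luminance values only, assuming a threshold of 1.0 for "overbright" and a decay of 0.85 per frame; both values and the function name are hypothetical, and this isn't the original postfx code:

```python
from typing import List, Tuple

def update_trails(trail: List[float], frame: List[float],
                  decay: float = 0.85, threshold: float = 1.0) -> Tuple[List[float], List[float]]:
    """One frame of a decaying afterimage buffer for overbright pixels (hypothetical).

    trail and frame hold linear HDR luminance per pixel; returns (new_trail, composited).
    """
    new_trail: List[float] = []
    composited: List[float] = []
    for t, c in zip(trail, frame):
        # fade the old residue, then refresh it wherever this frame is overbright
        t = max(t * decay, c if c > threshold else 0.0)
        new_trail.append(t)
        # the residue only shows where it is brighter than the current scene
        composited.append(max(c, t))
    return new_trail, composited
```

On the GPU this would be two floating-point render targets swapped every frame. Because the fade is exponential per frame, trail length depends on frame rate, so at VR refresh rates the decay would likely need to be scaled by frame time.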

Conclusion:

Now I have a customer base with PixelCNC: customers who invested in it as early-access software with the promise of new features coming down the pipe. I owe it to them, for their support, to stay focused and productive on PixelCNC. As far as I'm concerned, supporters who have made a financial investment in something should take precedence over anything else I may have going on.